Accuracy of Property Data – Important as Ever?

This is a guest post authored by Robert T. Murphy

I have had some discussion lately with valuation industry participants regarding the importance of the appraiser obtaining accurate property data as well as understanding its source for comparable properties considered in an appraisal. These discussions are the result, in part, of the addition of Advisory Opinion #37 (AO-37) to the Uniform Standards of Professional Appraisal Practice (USPAP) coming in January 2018.

A lot has been written about AO-37 and I have no intent to rehash the Opinion other than to say that it certainly addresses the issue that the credibility of any analysis incorporated into a specific tool’s output depends, in part, on the quality of its data. It is important to note that AO-37 isn’t concerned with just regression-type tools but also, as addressed in an illustration, those tools that automatically input information from an MLS. It is also clear that the appraiser must be the one controlling the input.

Government Sponsored Enterprises (GSEs) Fannie Mae and Freddie Mac are also concerned about the quality of property data. Whether it’s referred to as “data integrity” or “data accuracy,” the end meaning is the same. Both GSEs currently have analytical tools to assist their customers in analyzing appraisal reports. Fannie Mae has Collateral Underwriter (CU) while Freddie Mac’s offering is Loan Collateral Advisor. While these are two independent, unrelated systems, both attempt to identify underlying collateral risk by analyzing data integrity/accuracy issues.

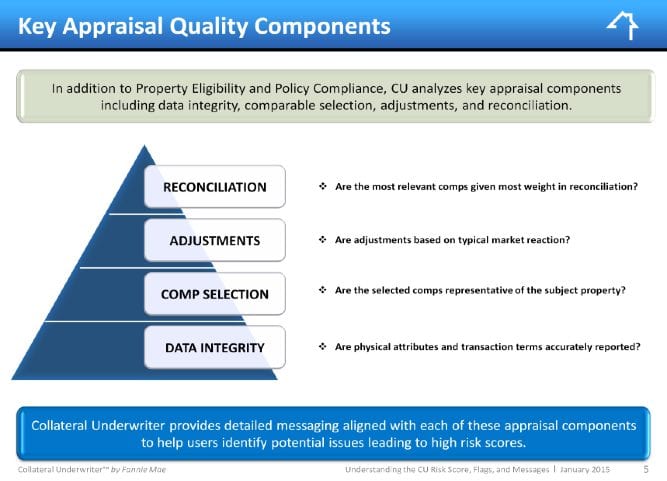

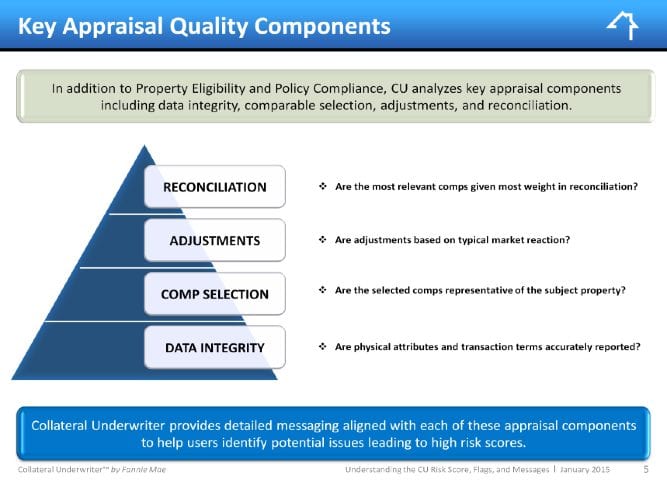

In Fannie Mae’s Collateral Underwriter training – Understanding CU Risk Scores, Flags, and Messages https://www.fanniemae.com/content/recorded_tutorial/collateral-underwriter-risk-score-flags-messages – they specifically address the underlying factors considered in the development of the CU Risk Score. One of those factors is Appraisal Quality which in part includes Data Integrity. The following excerpt is directly from their training material available on their website.

They also go on to state:

“First, when we look at Data Integrity, we’re looking to determine if the physical attributes and transaction terms of the subject property and comparables are accurately reported. This is the bottom of the pyramid here because it is the foundation for any appraisal report. We all know the old saying “garbage in, garbage out”. If the subject or comps are not accurately represented, it can influence our judgment of the entire appraisal report. It affects whether or not comps look similar to the subject, the direction and magnitude of the adjustments, and which comps should receive most weight in reconciliation.”

With respect to Freddie Mac’s Loan Collateral Advisor they state in their publication Understanding UCDP Proprietary Risk Score Messages, which is available on their website at http://www.freddiemac.com/learn/pdfs/uw/ucdp_riskscores.pdf, that there are two risk scores indicated, one being a Valuation Risk Score and the other being Appraisal Quality Risk Score. The publication further provides the following:

“Appraisal Quality Risk Score:

- Is a risk measure pertaining to the appraisal quality, data accuracy, and completeness of the appraisal report. The lower the risk score, the lower the risk of a significant appraisal quality defect or deficiency.

- Assesses multiple components of the appraisal to help you determine whether appraisals adhere to our standards and guidelines.

- Is influenced by many factors, including the integrity of the appraisal data, the relevance of the comparable sales selected relative to the pool of available sales, and the reasonableness and supportability of the adjustments.”

What is an appraiser to do?

As we all know, the availability and quality of property data varies greatly throughout the country. Taking into consideration the preceding discussion on USPAP, Fannie Mae, and Freddie Mac, I believe it is incumbent upon the appraiser to ensure they have access to the most complete and accurate sources of data available for the geographical areas in which they complete appraisals. AO-37 makes it clear that it is important not only to control input data but also to have an understanding of the source data.

Use of Multiple Listing Services (MLS)

With the growth and consolidation of MLSs, along with technological advances over the years, they are without a doubt one of the main sources of property data and information for appraisers. Aside from the usual input errors that can occur, the accuracy of some MLS data may differ depending on the format in which it is accessed. Let’s take a brief look at some of these formats: File Transfer Protocol (FTP), Internet Data Exchange (IDX), and Real Estate Transaction Standard (RETS).

One of the earliest methods of delivering property data was FTP, which transfers files from one host to another. For years it was the standard for transferring property data files. One issue with FTP is that there are no set standards between MLSs, which means it can become costly. The data transferred is somewhat straightforward, meaning that many fields are not included. Additionally, an individual record cannot be updated on its own: the entire database is moved in bulk each time records need to be updated, which is inefficient and can be very time consuming. As a result, updates tend to be less frequent.

Many of us remember the MLS books. I have many fond memories of perusing the pages of those books for comparables and leads. Fast forward, and that same information and then some is now available on multiple websites. That was made possible by IDX, which is the data exchange between an MLS board’s database and a broker/realtor’s or other entity’s website. For the first time, it was easy for the public to access large numbers of homes for sale. As home buyers became more Internet-savvy, IDX evolved to provide more options. Agents and brokers wanted more than the basic search products their MLS offered, so a new raw IDX data feed option was created. Raw data feeds allow agents and brokers to download all available IDX listings in a simple format (like an Excel spreadsheet) that can be used with custom home search applications. For all of its advantages, one of the major issues with IDX data is that it tends to be incomplete in certain fields, such as sales history and statuses.
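To make the incompleteness issue concrete, here is a minimal sketch of a completeness check on a listing record. The field names and sample records are hypothetical and purely illustrative; they are not taken from any actual IDX or MLS feed.

```python
# Hypothetical field names chosen for illustration only.
REQUIRED_FIELDS = ("address", "list_price", "status", "sales_history")


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or empty in a listing record."""
    return [f for f in required if record.get(f) in (None, "", [])]


# A made-up record lacking status and sales history.
sparse_record = {"address": "12 Elm St", "list_price": 250000}

# A made-up record with every required field populated.
full_record = {
    "address": "12 Elm St",
    "list_price": 250000,
    "status": "Sold",
    "sales_history": [{"date": "2016-05-01", "price": 240000}],
}

print(missing_fields(sparse_record))
print(missing_fields(full_record))
```

A check along these lines would let an appraiser see at a glance which comparables need their gaps filled from another source before being relied upon.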

RETS is another method, developed specifically for the real estate industry to request and receive data.

RETS is used to give brokers, agents and third parties access to listing and transaction data. MLSs nationwide have moved to adopt RETS as the industry standard because it drastically simplifies the process of getting data from an MLS to an agent/broker’s site or a third-party vendor. RETS allows the user to customize how the data is to be displayed. It also provides the most current data available, in that it is constantly updated.

RETS provides the user with data that is both more accurate and easier to work with. RETS data is designed to match each MLS’s unique practices, so its field names and option values typically resemble more closely what is seen when logged into the MLS itself. RETS is also easier for third-party vendors to troubleshoot than an FTP feed, so any issues can usually be diagnosed and corrected quickly.

Overall, RETS data tends to be extremely robust, typically contains most fields (including the property sales history and statuses) and is easy to access and support. It also makes it possible to get specific pieces of data (rather than an entire/large file), and data can be accessed on demand at any time of the day. Only the data that have been recently added or changed will need to be updated. This means that the data can be refreshed more regularly, thereby providing more current data.
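The difference between moving an entire file and updating only what has changed can be shown with a small sketch. This is a simplified in-memory illustration, not actual FTP or RETS client code; the record layout, the `modified` timestamp field, and the function names are assumptions made for the example.

```python
from datetime import datetime


def bulk_refresh(local, remote):
    """Bulk-file style: replace the whole local copy; return records transferred."""
    local.clear()
    local.update({key: dict(rec) for key, rec in remote.items()})
    return len(remote)


def incremental_refresh(local, remote, last_sync):
    """Incremental style: copy only records modified after last_sync."""
    changed = {k: r for k, r in remote.items() if r["modified"] > last_sync}
    local.update({key: dict(rec) for key, rec in changed.items()})
    return len(changed)


# Made-up listing records held by the data source.
remote = {
    "A1": {"status": "Sold", "modified": datetime(2017, 9, 1)},
    "A2": {"status": "Active", "modified": datetime(2017, 10, 15)},
    "A3": {"status": "Pending", "modified": datetime(2017, 10, 20)},
}

# The local copy as of the last sync; A3 is out of date.
local = {
    "A1": {"status": "Sold", "modified": datetime(2017, 9, 1)},
    "A2": {"status": "Active", "modified": datetime(2017, 10, 15)},
    "A3": {"status": "Active", "modified": datetime(2017, 10, 1)},
}

moved = incremental_refresh(local, remote, last_sync=datetime(2017, 10, 16))
print(moved, local["A3"]["status"])
```

In this toy example the incremental refresh transfers one record where a bulk refresh would transfer all three, which is why the incremental approach can be run far more often.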

If MLS data is being used for anything other than providing listings for public website display, RETS is the best method for MLS data access and retrieval. Additionally, since access to the MLS’s RETS server requires an agreement outlining the permitted use, fees (if any) and redistribution provisions of the data, it can be, and is, used for various purposes.

However, one of the main stumbling blocks with RETS is that the data feed itself is impossible to use without other software to understand it.

This is where a company such as DataMaster comes into play. For those who have never heard of DataMaster, their system allows the RETS data to be converted and transferred into an understandable format. This in turn allows appraisers to complete appraisal form reports more quickly and easily while using the most accurate data available. With the appraiser in control of comparable selections, their patented process provides the ability to simultaneously download MLS data as well as Public Record information into form reports while being UAD compliant. This allows appraisers to save time and money when it comes to completing their reports.

All of this is possible because DataMaster actually goes out to local MLSs through the “front door” and signs contracts allowing them the access and use of data directly from the RETS server for that particular MLS. What separates DataMaster from other vendors in this space is that, as a result of their relationships with local MLSs, there is no “gray data” or questionable permitted use of data involved. You can trust the information is the most accurate, timely and properly formatted information available from the MLS and Public Record.

Conclusion

Having spent approximately 40 years in the valuation industry, I like to take a look back from time to time, specifically to see what was being taught then, which honestly set a foundation for me. While contemplating this post I took a look at The Appraisal of Real Estate, Seventh Edition (1978) by the American Institute of Real Estate Appraisers and came across the following sentence in Chapter 15 – The Market Data Approach: Principles:

“Since no conclusion is better than the quality of data on which it is predicated, the appraiser screens and analyzes all data to establish its reliability and applicability before using it as the basis for a value indication in the market data approach.”

So, the answer to the question of the importance of accurate data is an unequivocal “yes”: it is indeed as important as ever!

About the Author:

Robert T. Murphy is a senior valuation executive with 40 years’ experience in the valuation industry. Currently Mr. Murphy is President of Collateral Advisors LLC, an independent consulting firm focusing on a wide range of valuation-related issues including but not limited to valuation techniques, policy, and compliance as well as data integrity and technology-related issues. Mr. Murphy was most recently Director of Property Valuation and Eligibility at Fannie Mae.

(DataMaster USA is a client of Collateral Advisors LLC)